Counting is Hard

by Michael Brundage Created: 11 May 2014 Updated: 20 May 2014

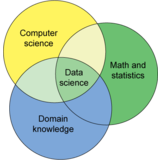

In my experience, the vast majority of business analysis tasks do not require advanced data science or machine learning. They really just require counting and presenting those counts in a way that has impact.

Most of us learned how to count before kindergarten, and yet, counting is surprisingly hard at scale. When I worked at Yahoo, a senior Director told me they did not have an accurate count of how many machines they had in their data centers. This boggles the mind of many laypersons, but is completely unsurprising to most engineers.

Many factors can make it difficult or impossible to count perfectly accurately:

- Errors. Sometimes your counts are off because you’re not doing it right.

- Sampling. Sometimes you’re intentionally sampling. You can extrapolate to a full count, but that’s inexact.

- Throttling. Sometimes, you’re throwing away a non-random fraction of data. These counts are lost.

- Definitions. What exactly are we counting?

- Filtering. You may filter out data that is malformed or satisfies other conditions. Websites filter out robots, for example.

Some of these can be mitigated or controlled for, or have unintended consequences, and that’s what I want to talk about here.

Errors

Counting errors happen, despite our best intentions. Because of this, it’s really important to have as many checks and tests as possible, to add regression tests for every error ever encountered (because those errors will occur again), and, where possible, to maintain secondary counts that are computed in a different way, against which you can compare and validate the primary counts (cross-checks).
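A cross-check can be as simple as comparing two independently computed counts against a tolerance. The following is a minimal sketch; the function name `cross_check`, the 1% default tolerance, and the example sources are assumptions made for illustration.

```python
def cross_check(primary, secondary, tolerance=0.01):
    """Return True when two independently computed counts agree
    within a relative tolerance."""
    if primary == secondary:
        return True
    baseline = max(abs(primary), abs(secondary))
    return abs(primary - secondary) / baseline <= tolerance

# Hypothetical example: orders counted from web logs vs. a billing database.
print(cross_check(10_000, 10_050))   # differs by about 0.5%
print(cross_check(10_000, 12_000))   # differs by about 17%: investigate
```

The right tolerance depends on how the two counts are produced; a check that never fires is as uninformative as one that always fires.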

Errors will still occur, and there are several corrective steps you can take:

- You can backfill, if the raw data from before the error occurred is still available.

- You can approximate, if there is a cross-check or other secondary source from which you can approximate.

- You can throw out the bad counts.

What you should not do is leave the bad counts in place and email everyone to let them know the counts are bad. Because, guess what, data propagates. Someone, somewhere, is going to use that bad data without realizing it, and may make bad business decisions based on it.

Because errors happen, and because you will need to fix or discard the erroneous data later, every data system should be designed with a way to signal that its data has changed so that all downstream calculations can recompute with the fixed/discarded data. If you’re reviewing a new system that lacks this capability, call it out.

Sampling and Throttling

Sampling, assuming it’s done right, introduces sampling error and changes counting from exact arithmetic into significance arithmetic. For example, if you sample at a rate of 1/10, then 10 + 10 is no longer 20. It’s really a distribution over the interval [2,38]. Summary statistics over sampled data have an error term that can be quite large, depending on the statistic, the sampling method, and the population distribution.
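One way to see the error term is to scale a sampled count back up and attach a rough interval to it. The sketch below assumes independent (Bernoulli) sampling at a known rate and uses a normal approximation, which is poor for small counts. The function name `estimate_total` and the simulated population are illustrative only.

```python
import math
import random

def estimate_total(sampled_count, rate, z=1.96):
    """Scale a sampled count up to a population estimate with a rough interval.

    Assumes each item was kept independently with probability `rate`.
    """
    estimate = sampled_count / rate
    # Var(k) = N*p*(1-p); substituting N ~ k/p gives SE(k/p) ~ sqrt(k*(1-p))/p.
    std_error = math.sqrt(sampled_count * (1 - rate)) / rate
    return estimate, max(0.0, estimate - z * std_error), estimate + z * std_error

# Simulate a true population of 10,000 events sampled at 1/10.
rng = random.Random(42)
true_total = 10_000
rate = 0.1
kept = sum(1 for _ in range(true_total) if rng.random() < rate)

estimate, low, high = estimate_total(kept, rate)
print(f"kept={kept} estimate={estimate:.0f} interval=({low:.0f}, {high:.0f})")
```

Even in this well-behaved case the interval is a few percent wide, and it widens quickly as the sampled count shrinks.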

Sampling done correctly makes most analyses more complicated, and definitely complicates how you communicate your results. However, in my experience, sampling is rarely done correctly.

First, you need a high-quality random number generator with a period greater than the population size and no problematic statistical correlations. The current state-of-the-art is the WELL generator.[1] Sampling with a standard library routine like rand() is usually a bad idea, unless the underlying implementation uses a good RNG from the Mersenne Twister family, such as MT19937 or SFMT (C++11’s <random>, Boost, and Python all provide Mersenne Twister generators). (Note: generating random numbers is hard for most developers to get right. Even the comp.lang.c FAQ had it wrong for a while. I’ve seen statistical effects from bad RNGs in my work, so be careful.)

Second, sampling must be applied after all other conditionals. If you sample and then apply additional filtering, your sample is statistically biased. For example, suppose you randomly select 1/10 of all traffic to a website, and then exclude all requests with no cookies. You no longer have a uniformly random sample of all traffic. If instead you filter out all requests with no cookies, and then randomly select 1/10 of the remaining requests, you have a uniformly random sample of all eligible traffic (but not of all traffic).

Third, throttling is not sampling. Throttling is a form of clamping: When some resource constraint is exceeded, you throw away all (or most) samples. For example, if a load balancer becomes saturated, a service might throw out all incoming requests. The problem is that this is a non-uniform sampling method. For example, if you drop samples when your system becomes overloaded during peak activity, you will have a sample biased towards off-peak behavior. Throttling almost always introduces selection bias.
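A small simulation makes the difference between throttling and uniform sampling concrete. The traffic shape, the per-hour capacity, and the sampling rate below are made-up numbers chosen only to illustrate the effect.

```python
import random

rng = random.Random(7)

# Hypothetical traffic: 24 hourly buckets, with a peak from 18:00 to 21:59.
requests_per_hour = [1000 if 18 <= h < 22 else 200 for h in range(24)]
capacity_per_hour = 300   # assumed throttle: requests beyond this are dropped
sample_rate = 0.25        # uniform sampling rate, for comparison

def peak_share(counts):
    """Fraction of the counted requests that fall in the peak hours."""
    return sum(counts[18:22]) / sum(counts)

# Throttling clamps each hour; sampling keeps each request with equal probability.
throttled = [min(n, capacity_per_hour) for n in requests_per_hour]
sampled = [sum(1 for _ in range(n) if rng.random() < sample_rate)
           for n in requests_per_hour]

print(f"true peak share:      {peak_share(requests_per_hour):.2f}")
print(f"uniform sample share: {peak_share(sampled):.2f}")
print(f"throttled share:      {peak_share(throttled):.2f}")
```

In this setup half of the true traffic is peak traffic, and the uniform sample stays close to that. The throttled data shows only about 23% peak traffic, because the clamp removes requests exactly when the system is busiest.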

Any time you are working with a sampled data set, you should go deep into the implementation to verify that sampling was implemented correctly.

You can also check that sampling is idempotent with respect to distributions (because sampling should only scale the distributions, not alter their shape, as long as you remain above a minimum bin count threshold). You can verify idempotence in two ways: (1) Obtain an unsampled dataset and compare attribute distribution between the full population and the sampled population. (2) Apply the same sampling a second time, and compare the data that was sampled once with the data that was sampled twice.
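Both checks reduce to comparing the distribution of some attribute between two datasets. Here is a minimal sketch using total variation distance as the comparison. The attribute (browser), the weights, and the helper names are hypothetical, and what counts as "close enough" is a judgment call that depends on bin counts.

```python
import random
from collections import Counter

def proportions(values):
    """Map each distinct value to its share of the total."""
    counts = Counter(values)
    total = sum(counts.values())
    return {key: n / total for key, n in counts.items()}

def total_variation(p, q):
    """Half the L1 distance between two discrete distributions (0 = identical)."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)

rng = random.Random(1)

def sample(rows, rate):
    """Keep each row independently with probability `rate`."""
    return [row for row in rows if rng.random() < rate]

# Hypothetical attribute: the browser recorded on each request.
population = rng.choices(["chrome", "firefox", "safari"], weights=[6, 3, 1], k=50_000)
once = sample(population, 0.1)
twice = sample(once, 0.1)

# Check (1): full population vs. sampled population.
print(total_variation(proportions(population), proportions(once)))
# Check (2): sampled once vs. sampled twice.
print(total_variation(proportions(once), proportions(twice)))
```

A distance near zero is what correct sampling should produce. The second comparison is noisier because the twice-sampled set is much smaller, which is why the minimum bin count matters.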

When you have data that was mis-sampled, you can sometimes correct for the sample error, as long as enough data from every attribute group is still present. This is done by either introducing weights in subsequent calculations, or else re-sampling with a custom distribution that undoes the bias and gets you back to a uniform distribution. Both of these are error-prone; it’s almost always better to fix the sampling error and re-measure. If the sampling error was small and you just need directionally correct results, you may still be able to use the data.
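The weighting approach can be sketched as follows, assuming you know (or can reconstruct) the rate at which each attribute group was actually kept. The groups, rates, and counts here are invented for illustration.

```python
from collections import Counter

def inverse_rate_weights(rates):
    """Weight for each group = 1 / (rate at which that group was actually kept)."""
    return {group: 1.0 / rate for group, rate in rates.items()}

# Hypothetical mis-sampled data: mobile was kept at 5%, desktop at 10%.
actual_rates = {"desktop": 0.10, "mobile": 0.05}
sampled_rows = ["desktop"] * 600 + ["mobile"] * 200

weights = inverse_rate_weights(actual_rates)
raw = Counter(sampled_rows)
# Weighted counts estimate the size of each group in the full population.
estimated = {group: raw[group] * weights[group] for group in raw}

raw_mobile_share = raw["mobile"] / sum(raw.values())
weighted_mobile_share = estimated["mobile"] / sum(estimated.values())
print(f"raw mobile share:      {raw_mobile_share:.2f}")
print(f"weighted mobile share: {weighted_mobile_share:.2f}")
```

Here the raw mobile share of 0.25 becomes 0.40 after weighting. The correction is only as good as the assumed rates, and groups with very few surviving rows get large, noisy weights.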

Definitions

There’s this funny thing where by giving something a name, we change how people perceive it. For example, the original Xbox, Xbox 360, and Xbox One all share the name “Xbox,” so we perceive them as all being related somehow. But the underlying hardware is completely different, the operating system has evolved tremendously, the user experience is different… What they have in common is a brand name.

I guarantee you, every website has a different definition of “hit” and “unique user.” Do these counts include known or probable robots? Do they include requests that were closed before the entire response was transmitted? How was “unique” determined? (Across all datacenters? Hourly or daily? In which timezone(s)? How was clock skew handled?)
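To illustrate how much a detail like timezone matters, the sketch below counts daily unique users for the same events under two day boundaries. The events and the fixed UTC-8 offset are hypothetical; a real system would also need to handle daylight saving and clock skew.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone

def daily_uniques(events, tz):
    """Count distinct user IDs per calendar day, as seen in the given timezone."""
    users_by_day = defaultdict(set)
    for user_id, timestamp in events:
        users_by_day[timestamp.astimezone(tz).date()].add(user_id)
    return {day.isoformat(): len(users)
            for day, users in sorted(users_by_day.items())}

utc = timezone.utc
pacific = timezone(timedelta(hours=-8))  # fixed offset; ignores daylight saving

# Hypothetical events: (user ID, UTC timestamp).
events = [
    ("alice", datetime(2014, 5, 11, 2, 0, tzinfo=utc)),
    ("alice", datetime(2014, 5, 11, 20, 0, tzinfo=utc)),
    ("bob", datetime(2014, 5, 11, 23, 0, tzinfo=utc)),
    ("bob", datetime(2014, 5, 12, 3, 0, tzinfo=utc)),
]

print(daily_uniques(events, utc))      # {'2014-05-11': 2, '2014-05-12': 1}
print(daily_uniques(events, pacific))  # {'2014-05-10': 1, '2014-05-11': 2}
```

The same four events produce different daily numbers, and neither answer is wrong. They are answers to different questions.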

The truth is, every metric has a nuanced definition. Let’s count people by gender. What exactly is gender? Male, female… transsexuals? chimeras? Facebook now has 56 choices for gender. What exactly is a person? Do you have a minimum age limit? Do you count fetuses? Are conjoined twins one or two people? Are you really counting people, or some proxy for people such as social security number or login ID?

So, it’s really important to give metrics crisp definitions. Go overboard on being as clear as possible about what is, and is not, included in a count. Anticipate possible sources of confusion and work them out.

If you’re using data defined by someone else, dig into the implementation to discover exactly what its definition is. If you’re getting someone else to implement a new metric for you, be crystal clear on what that metric should measure, and review the implementation to make sure it matches your understanding.

Most of the time you won’t need to think about all these nuances, but in my experience, sometimes they will significantly affect the results of an analysis.

Have one authoritative source for metrics documentation, so that when definitions (inevitably) change, you don’t end up with a dozen contradictory definitions all over the place and mass confusion. When definitions change, if the changes are across multiple systems and the switch to the new definition is not coordinated, there will be a period of time when those systems are using different definitions for a metric. Sometimes this mismatch can cause problems for analysis.

Avoid giving metrics overly simplified names when they have a lot of nonobvious nuances that affect how the metric will be interpreted by non-experts. Metrics like “customer abandonment” and “visits” are especially ripe for misunderstanding. It would be better to give these metrics more descriptive names like “page unload() before page load()” or “approximate page sequences.”

Filtering

All data is filtered. In large systems, it’s filtered in multiple places. Malformed data usually can’t be used by anyone, and is removed. A website may remove known and probable robots.

Data should be filtered as early as possible, so that it’s clean for all downstream consumers (and to help reduce its size). At NASA, we had level 0 telemetry, which was the raw packet stream from a spacecraft (sometimes out of order or duplicate or malformed), and then almost immediately this was reduced to level 1 telemetry. Level 1 telemetry was 1000x smaller in size and contained only scientifically useful data. And additional levels of filtering were applied, sometimes through level 3 or 4. Each level of data had a different audience.

When you filter data, always count how much data was filtered and why, and then monitor those counts. When you see an anomalous data pattern in a metric, the first thing you should check is the filter counts. I once saw a data stream suddenly go from nearly 0% filtered to more than 90%. Whoops!
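Counting what was filtered, and why, costs very little if the filter itself records a reason for every record it drops. The following is a minimal sketch; the record fields, filter rules, and function name are hypothetical.

```python
from collections import Counter

def filter_records(records, filters):
    """Apply named filters in order; return kept records and drop counts by reason.

    `filters` is a list of (reason, predicate) pairs. A record is dropped by
    the first predicate that returns True for it.
    """
    kept = []
    dropped = Counter()
    for record in records:
        for reason, should_drop in filters:
            if should_drop(record):
                dropped[reason] += 1
                break
        else:
            # No filter matched, so the record survives.
            kept.append(record)
    return kept, dropped

# Hypothetical request records and filter rules, for illustration only.
records = [
    {"url": "/home", "agent": "Mozilla/5.0"},
    {"url": None, "agent": "Mozilla/5.0"},
    {"url": "/home", "agent": "ExampleBot/1.0"},
    {"url": "/cart", "agent": "Mozilla/5.0"},
]
filters = [
    ("malformed", lambda r: not r.get("url")),
    ("robot", lambda r: "bot" in r["agent"].lower()),
]

kept, dropped = filter_records(records, filters)
filtered_fraction = sum(dropped.values()) / len(records)
print(dict(dropped), f"filtered={filtered_fraction:.0%}")
```

Emitting `dropped` and `filtered_fraction` alongside the data gives you a metric to monitor, so a jump in the filtered fraction shows up as an alert instead of as a mystery in a downstream analysis.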

Filters can introduce sample bias, if applied after sampling or after A/B experiment assignment. Filters can also suffer from type-I and/or type-II errors (false positives and false negatives).

For example, website robots are not perfectly recognized. A filter to remove robots will still let some robots through and will also erroneously remove some non-robots. I once ran an experiment that massively reduced customer abandonment. Customers who abandoned were being inadvertently classified as robots. Robots were filtered out of experiment data. Consequently, in control the data was being filtered out and in treatment it was not, badly skewing the experiment results.

Conclusion

Counting is hard, but critically important to data science. Pay attention to the details of how your counts were obtained, and scrutinize those methods for errors. It’s always better to say “Our data is bad, we have to wait for better data” than to publish a result with erroneous conclusions because it was based on bad data.

-

[1] A somewhat recent survey of PRNGs is Random Number Generation, Chris Lomont, 2008.